When a self-driving car accident happens, who is held responsible: the driver, the manufacturer, or the technology itself? This article examines recent accidents, statistical trends, contributing factors, and the legal complexities of self-driving car accidents. If you have been injured in a motor vehicle accident involving a self-driving car, understanding these factors is crucial for your case.

Regular maintenance and software updates for autonomous vehicles are essential to ensure safety on public roads.

Key Takeaways

- Recent accidents involving vehicles with self-driving or driver-assistance features, including a fatal crash involving the Xiaomi SU7, raise significant concerns about the safety and reliability of autonomous technology and put the tech companies now building cars under scrutiny.

- Statistical analysis shows that from 2019 to June 2024, there were over 3,900 crashes involving autonomous vehicles, resulting in 83 deaths, according to data from transportation safety organizations. Several states have also passed new regulations establishing safety protocols for autonomous vehicles on public roads.

- Determining liability in self-driving car accidents is complicated because multiple parties, such as manufacturers and software developers, may be responsible, and blame is shifting from drivers toward manufacturers as the technology develops.

Recent Self-Driving Car Accidents

Self-driving car accidents have been making headlines lately, and you can expect more coverage as these vehicles become more common on our roads. One of the most significant incidents was the March 29, 2025, crash of a Xiaomi SU7 in China while its driver-assistance system was engaged, which killed three university students. It was the first reported fatality involving the SU7 since Xiaomi began selling it in March 2024. Accidents like this one are raising serious questions about safety.

Another notable accident happened in San Francisco in 2023, when a pedestrian struck by a hit-and-run driver was thrown into the path of a Cruise autonomous vehicle, which then ran over her. Incidents like this show how hard it can be for autonomous vehicles to respond correctly to sudden, unexpected situations.

Tesla, a major player in the autonomous vehicle market, has also faced heavy scrutiny over frequent crashes involving its cars. Reported problems include collisions with parked emergency vehicles and phantom braking, where the vehicle brakes suddenly for no obvious reason.

The risks associated with autonomous vehicles are getting more attention with each incident. Self-driving cars can be difficult to predict, particularly at intersections or in low light, because they may not react the way a human driver would. The complexity of the technology makes it even harder for other drivers to anticipate their movements.

Statistics on Self-Driving Car Accidents

From 2019 through June 2024, there were 3,979 crashes involving autonomous vehicles, with 83 deaths, according to transportation safety organizations. The highest annual total, 1,450 crashes, was recorded in 2022, as more of these vehicles took to the road.

Tesla vehicles account for a large share of these numbers: about 53.9% of all reported autonomous vehicle incidents from June 2021 to June 2024, with California recording the most incidents of any state. This highlights Tesla's major role in the autonomous vehicle market while raising questions about the safety of its technology.

During this same period, 496 people were injured or killed in autonomous vehicle accidents. These numbers show why thorough testing and safety measures are so important to protect both passengers and pedestrians.

Factors Contributing to Self-Driving Car Accidents

Self-driving car accidents have many causes, often a mix of human mistakes and technological limitations. Human error remains a big factor, even with autonomous vehicles. For example, if you don't take control when the car signals that you should, you could be held responsible for an accident.

Technology has its problems too. The Xiaomi SU7 crash raised major concerns about how well driver-assistance systems handle complicated traffic situations. In a Level 2 automated vehicle, you must stay engaged and ready to take over at any time. Accidents still happen, though, because the system relies on humans to intervene, people make mistakes, and today's self-driving technology is far from perfect.

To reduce these risks, drivers should be careful when using self-driving features: pay attention to the road, be ready to take control, and understand what your vehicle's autonomous features can and can't do. Using these features only as intended is crucial to operating them safely.

Some vehicles with self-driving features, including the Xiaomi SU7 involved in the fatal crash, don't have lidar sensors, which limits how well they can detect obstacles, especially in the dark. That's one of many reasons to give these vehicles plenty of room and drive cautiously around them.

Case Study: Xiaomi SU7 Fatal Crash

The Xiaomi SU7 fatal crash shows just how complicated self-driving car accidents can be. The driver took manual control three seconds after receiving a warning from the system, and the car then struck a concrete barrier.

This tragic accident killed three university students and has intensified debate over the safety of self-driving technology and over who is responsible: the companies that make the cars or the people who drive them.

Investigation and Findings

Investigators looking into the Xiaomi SU7 crash made several important findings. The car hit a concrete barrier at about 97 km/h (roughly 60 mph) after the driver took over manually. Xiaomi's emergency systems activated right after the impact, notifying authorities and the car's owner.

However, the vehicle's automatic emergency braking system did not prevent the collision with the concrete barrier; it simply wasn't up to handling this situation. The car also lacked lidar sensors, which made obstacles harder to detect, especially in poor lighting.

More broadly, self-driving systems may not detect every obstacle or process information quickly enough to react, and they can move in ways other drivers don't expect. That is one of many reasons why giving these vehicles plenty of space is important.

Impact on Xiaomi

The crash had an immediate impact on Xiaomi. The company quickly formed a team to work with police on the investigation, and it has promised to be transparent about the process and to support the families of those who died while the investigation continues.

After the crash, Xiaomi's stock fell sharply, erasing over $16 billion in market value. The company has said it bears responsibility for the accident and is working with local authorities as the investigation continues.


Liability in Self-Driving Car Accidents

Figuring out who’s to blame in self-driving car accidents is tricky and involves looking at multiple parties.

Potentially liable parties include:

- Manufacturers

- Software developers

- Vehicle owners

- Other parties, such as component suppliers or other drivers

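The NTSB's investigation into the fatal 2018 crash involving an Uber self-driving test vehicle in Tempe, Arizona, showed that blame was shared: the human backup driver failed to monitor the road, Uber's self-driving unit had inadequate safety practices, and Arizona's transportation department provided insufficient oversight of autonomous vehicle testing.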

If you've been hurt in a self-driving car accident, you may be able to seek compensation through a personal injury claim to cover your medical bills and rehabilitation costs.

Detailed forensic investigations and evaluations of the vehicle’s hardware and software are essential in determining who’s at fault in self-driving accidents. Investors are paying close attention to these liability issues when they decide whether to put money into self-driving vehicle companies.

Manufacturer Responsibility

In crashes involving fully automated vehicles, the manufacturer is usually considered most responsible. As autonomous vehicles become more common, we’re seeing responsibility shift from drivers to the companies that make these vehicles. If a defective part causes a collision, the companies that make those components can be held liable alongside the vehicle manufacturers. Manufacturers can reduce their liability by following safety standards and thoroughly testing before selling their vehicles.

Vehicle manufacturers have the responsibility to ensure their products are safe and don’t pose unreasonable risks. Self-driving car manufacturers who don’t meet safety standards can be held liable if an accident happens because of their negligence.

Determining liability for self-driving cars gets complicated, with different parties bearing different levels of responsibility. On the road, watch for turn signals and other cues that indicate what an autonomous vehicle might do next.

Driver Responsibility

In partially autonomous vehicles, responsibility is typically shared between you and the manufacturer. As vehicles become more autonomous, understanding your responsibility as a driver becomes critical. You might be held responsible if you stop paying attention or use the autonomous features in ways they weren’t designed for.

As self-driving technology continues to evolve, the question of driver responsibility will keep changing and require ongoing legal and ethical discussions.

Legal Frameworks Governing Self-Driving Cars

The laws around self-driving cars are constantly changing. The National Highway Traffic Safety Administration (NHTSA) is the federal agency responsible for setting and enforcing vehicle safety standards, and those federal regulations are important for keeping autonomous vehicles safe. However, the NHTSA has not yet established national safety standards specifically for self-driving cars, and self-driving companies don't have to prove their vehicles are safe before putting them on the road.

Federal guidelines aim to simplify regulations for autonomous vehicles while balancing safety and innovation. Without proper federal oversight, however, unsafe self-driving systems could be deployed and cause preventable accidents. In the meantime, states have adopted their own rules, which can differ significantly, and some have passed laws specifically addressing the testing and operation of self-driving cars on public roads.

State regulations for autonomous vehicles vary significantly, with California having one of the most established systems.

Legal challenges involving self-driving cars often focus on establishing liability and proving negligence, particularly whether manufacturers or software developers are accountable. Lawmakers are still struggling to develop regulations that ensure safety and address these liability concerns.

Insurance Implications for Self-Driving Car Accidents

Insurance liability for self-driving accidents will be a hot topic in the coming years, with major implications for liability policies and for what consumers expect from auto insurance.

Insurance policies may need to change to handle situations where there’s no human driver in fully autonomous vehicles. There might eventually be a universal liability insurance model where autonomous vehicle manufacturers cover all accidents involving their cars.

Technological Challenges and Solutions

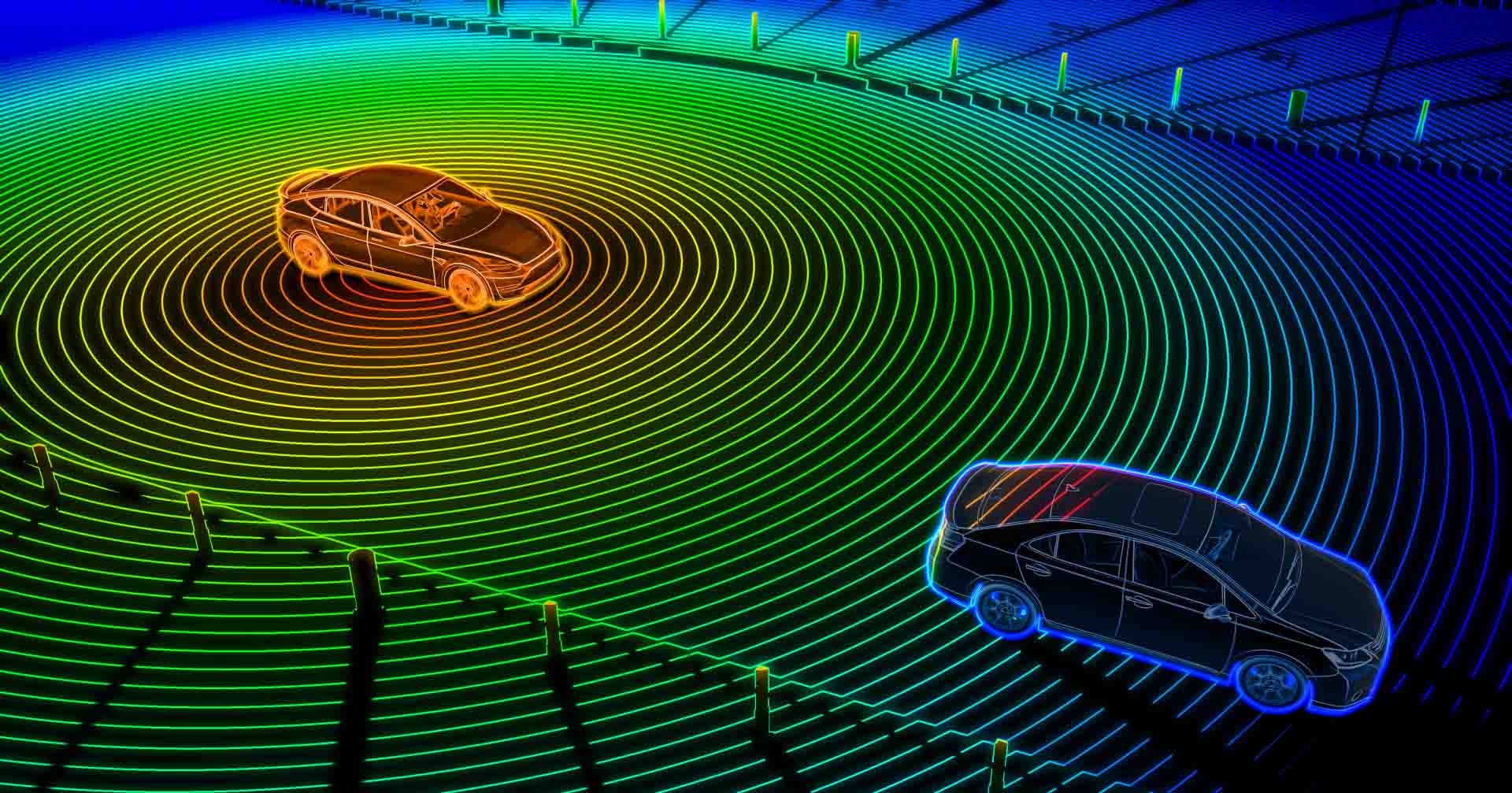

Many self-driving cars combine cameras, radar, and lidar to build a detailed view of their surroundings that can extend beyond what humans see. But their perception systems can misinterpret road signs, which can lead to accidents, and the computer systems in autonomous vehicles can make errors quite different from typical human driving mistakes.

Despite all the advances, the safety benefits of self-driving cars remain largely unproven. For autonomous vehicles to succeed, they need to earn public trust by demonstrating that they're safe.

Artificial intelligence is central to how self-driving cars work: it interprets sensor data and decides how the vehicle should respond across different road conditions.

Public Perception and Trust in Autonomous Vehicles

After the Xiaomi SU7 accident, Xiaomi's stock fell by over 5%, reflecting investor worries about the safety of its self-driving technology and about how public opinion would respond.

How responsibility is assigned in self-driving car accidents matters well beyond the courtroom. These accidents significantly influence how much the public trusts autonomous vehicle technology, and that trust will shape the industry's future.

If you or someone you love has been injured in a motor vehicle accident involving a self-driving car, whether as a pedestrian, as the occupant of a regular vehicle, or as the operator of an autonomous vehicle, seek legal assistance right away to understand your options.

Future of Self-Driving Car Legislation

Legal precedents on liability in self-driving car accidents will evolve as these vehicles become more common. Common questions include whether liability legislation should come from the federal government or the states, and what form it should take. Unresolved liability issues could delay or prevent widespread consumer use of driverless cars.

Regulators face challenges as they try to create effective safety regulations and standards for autonomous vehicles. The National Highway Traffic Safety Administration hasn’t established national safety standards for self-driving cars.

Public resistance to self-driving cars has increased, driven by safety fears and a lack of trust in the technology. Supporters expect roads to become safer as more vehicles operate in self-driving mode, although those benefits have yet to be proven.

Summary

In summary, the rise of self-driving cars brings great potential but also significant challenges, especially regarding liability. Recent high-profile accidents, like the Xiaomi SU7 crash, show how complicated it is to determine who’s responsible. Understanding the contributing factors, legal frameworks, and technology limitations is crucial for advancing this technology safely.

Looking ahead, it’s clear that strong legislation, better technology, and increased public trust will be essential for widespread adoption of self-driving cars. By addressing these challenges directly, we can create a safer and more efficient transportation future.

Frequently Asked Questions

Who is liable if Tesla autopilot crashes?

Tesla has often avoided liability for crashes involving Autopilot because courts tend to attribute them to driver error. However, if a plaintiff can prove that Autopilot didn't work as advertised, Tesla may be held liable.

How many self-driving cars have crashed in 2025?

As of February 2025, 58 self-driving car crashes had been reported that year.

Has there ever been a self-driving car accident?

Yes. Self-driving cars have been involved in many accidents, including a notable fatal collision in San Francisco involving a fully autonomous vehicle with no one in the driver's seat. That incident marked a significant moment in the history of autonomous vehicles.

Who is typically held liable in self-driving car accidents?

Typically, liability in self-driving car accidents can fall on manufacturers, software developers, and vehicle owners, depending on the specific circumstances. This determination often requires thorough investigations of the vehicle’s hardware and software.